Note

This tutorial is available as a Jupyter notebook. Download notebook

Tutorial 07: Hyperparameter Tuning#

🟡 Intermediate — Familiarity with ML concepts helpful

Learn systematic approaches to finding optimal hyperparameters for your boosted models.

What you’ll learn#

Important hyperparameters and their effects

Grid search and random search

Best practices for tuning

Avoid common pitfalls

[1]:

import numpy as np

import matplotlib.pyplot as plt

from sklearn.datasets import make_regression

from sklearn.model_selection import train_test_split, cross_val_score, GridSearchCV, RandomizedSearchCV

from scipy.stats import uniform, randint

from boosters.sklearn import GBDTRegressor

Key Hyperparameters#

Parameter |

Default |

Effect |

Tuning Range |

|---|---|---|---|

|

100 |

Number of trees |

50 - 1000+ |

|

0.3 |

Step size |

0.01 - 0.3 |

|

6 |

Tree complexity |

3 - 10 |

|

1.0 |

Row sampling |

0.5 - 1.0 |

|

1.0 |

Column sampling |

0.5 - 1.0 |

|

1.0 |

L2 regularization |

0 - 10 |

[2]:

# Generate data

X, y = make_regression(n_samples=2000, n_features=20, noise=5.0, random_state=42)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

Baseline Model#

[3]:

# Baseline with defaults

baseline = GBDTRegressor()

baseline_scores = cross_val_score(

baseline, X_train, y_train,

cv=5, scoring='neg_root_mean_squared_error'

)

print(f"Baseline CV RMSE: {-baseline_scores.mean():.4f} ± {baseline_scores.std():.4f}")

Baseline CV RMSE: 49.3708 ± 1.2893

Grid Search#

Exhaustive search over specified parameter grid:

[4]:

# Define parameter grid

param_grid = {

'n_estimators': [50, 100, 150],

'max_depth': [4, 6, 8],

'learning_rate': [0.05, 0.1, 0.2],

}

# Grid search (n_jobs=1 to avoid pickling issues with Rust models)

grid_search = GridSearchCV(

GBDTRegressor(),

param_grid,

cv=3,

scoring='neg_root_mean_squared_error',

n_jobs=1,

verbose=1

)

grid_search.fit(X_train, y_train)

print(f"\nBest parameters: {grid_search.best_params_}")

print(f"Best CV RMSE: {-grid_search.best_score_:.4f}")

Fitting 3 folds for each of 27 candidates, totalling 81 fits

Best parameters: {'learning_rate': 0.1, 'max_depth': 4, 'n_estimators': 150}

Best CV RMSE: 43.5707

Random Search#

More efficient for large parameter spaces:

[5]:

# Define parameter distributions

param_dist = {

'n_estimators': randint(50, 200),

'max_depth': randint(3, 10),

'learning_rate': uniform(0.01, 0.29), # 0.01 to 0.3

'subsample': uniform(0.6, 0.4), # 0.6 to 1.0

'reg_lambda': uniform(0, 10),

}

# Random search (n_jobs=1 to avoid pickling issues with Rust models)

random_search = RandomizedSearchCV(

GBDTRegressor(),

param_dist,

n_iter=20, # Number of parameter combinations to try

cv=3,

scoring='neg_root_mean_squared_error',

n_jobs=1,

random_state=42,

verbose=1

)

random_search.fit(X_train, y_train)

print(f"\nBest parameters: {random_search.best_params_}")

print(f"Best CV RMSE: {-random_search.best_score_:.4f}")

Fitting 3 folds for each of 20 candidates, totalling 60 fits

Best parameters: {'learning_rate': np.float64(0.2753383059076964), 'max_depth': 3, 'n_estimators': 121, 'reg_lambda': np.float64(4.494506741382034), 'subsample': np.float64(0.6381640465961645)}

Best CV RMSE: 39.4761

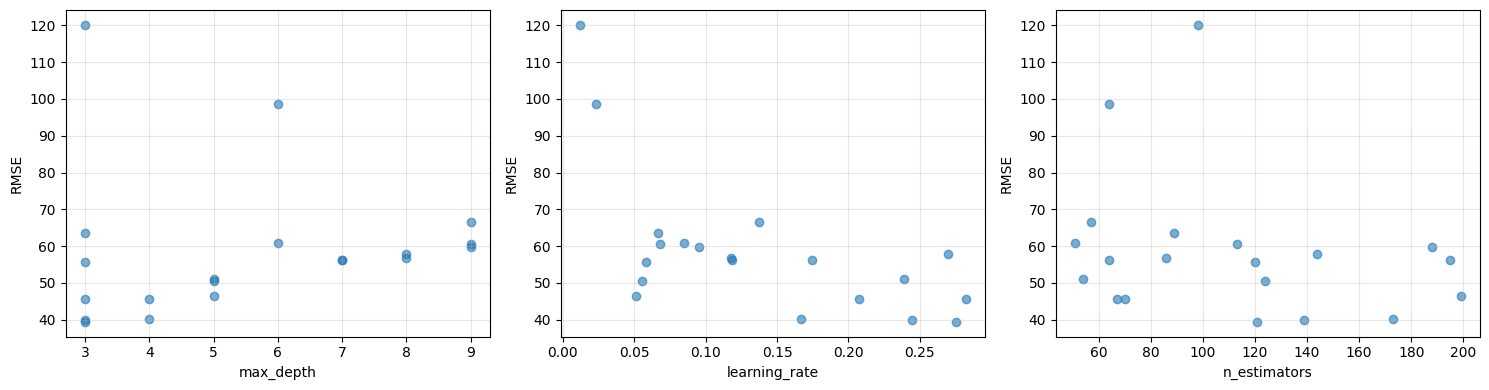

Visualize Search Results#

[6]:

# Extract results

results = random_search.cv_results_

# Plot hyperparameter vs score

fig, axes = plt.subplots(1, 3, figsize=(15, 4))

for ax, param in zip(axes, ['param_max_depth', 'param_learning_rate', 'param_n_estimators']):

x = [p for p in results[param]]

y = -results['mean_test_score']

ax.scatter(x, y, alpha=0.6)

ax.set_xlabel(param.replace('param_', ''))

ax.set_ylabel('RMSE')

ax.grid(True, alpha=0.3)

plt.tight_layout()

plt.show()

Evaluate Best Model#

[7]:

# Get best model

best_model = random_search.best_estimator_

# Evaluate on test set

from sklearn.metrics import mean_squared_error, r2_score

y_pred = best_model.predict(X_test)

test_rmse = np.sqrt(mean_squared_error(y_test, y_pred))

test_r2 = r2_score(y_test, y_pred)

print(f"Test set performance:")

print(f" RMSE: {test_rmse:.4f}")

print(f" R²: {test_r2:.4f}")

Test set performance:

RMSE: 36.7469

R²: 0.9563

Tuning Best Practices#

Start simple: Try defaults first

Learning rate first: Lower is usually better (with more trees)

Tree depth: Start with 4-6, increase if underfitting

Regularization: Add if overfitting (subsample, colsample, reg_lambda)

Final tuning: Fine-tune n_estimators with early stopping

[8]:

# Recommended tuning workflow

print("Recommended tuning order:")

print("1. Set learning_rate=0.1, n_estimators=1000 (with early stopping)")

print("2. Tune max_depth and min_child_weight")

print("3. Tune subsample and colsample_bytree")

print("4. Tune reg_lambda and reg_alpha")

print("5. Lower learning_rate, increase n_estimators")

Recommended tuning order:

1. Set learning_rate=0.1, n_estimators=1000 (with early stopping)

2. Tune max_depth and min_child_weight

3. Tune subsample and colsample_bytree

4. Tune reg_lambda and reg_alpha

5. Lower learning_rate, increase n_estimators

Summary#

In this tutorial, you learned:

✅ Key hyperparameters and their effects

✅ Grid search for small parameter spaces

✅ Random search for larger parameter spaces

✅ Best practices for efficient tuning

Next Steps#

Tutorial 08: Explainability — Interpret model predictions

Tutorial 09: Model Serialization — Save and load models